VR-Fi: Positioning and Recognizing Hand Gestures via VR-embedded Wi-Fi Sensing

Published in IEEE Transactions on Mobile Computing, 2025

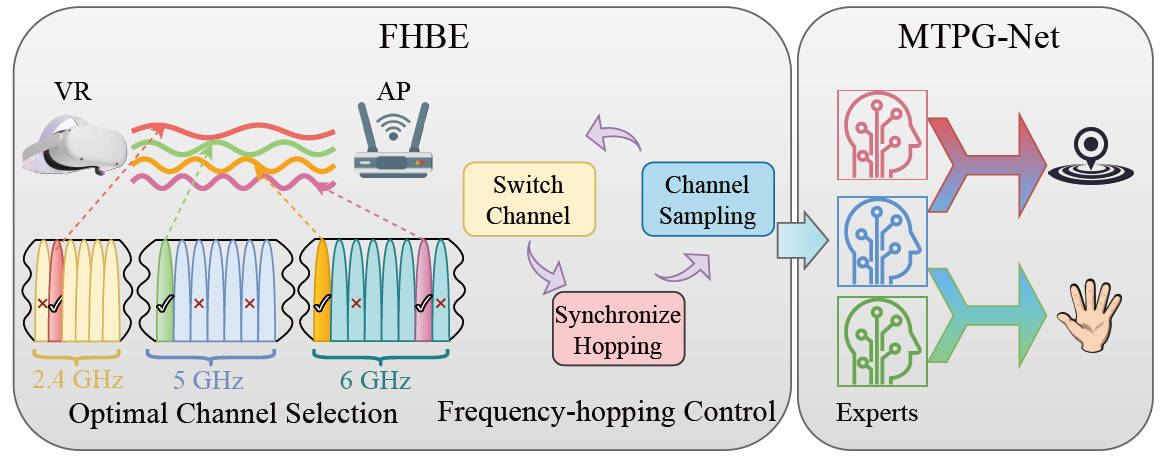

Accurate gesture-based interaction is crucial to the immersive experience of VR (virtual reality) systems, and it in turn requires both positioning and recognizing gestures in the physical world. However, existing VR gesture recognition methods are predominantly vision-based, which incurs high computational demands and raises privacy concerns. Meanwhile, Wi-Fi-based gesture recognition methods, regarded as a promising complement to vision-based ones, typically lack gesture positioning capabilities. To this end, we propose VR-Fi, a gesture positioning and recognition system that leverages the Wi-Fi embedded in VR headsets. To position gestures across different areas, VR-Fi introduces a frequency-hopping bandwidth expansion (FHBE) technique that improves the spatial resolution for locating a target. In addition, VR-Fi designs neural models that process the FHBE-enhanced Wi-Fi CSI (channel state information) and meet the multi-task requirements of jointly positioning and recognizing hand gestures. Extensive experiments show that VR-Fi achieves a positioning accuracy of 94.47%, a recognition accuracy of 92.13%, and a joint accuracy of 89.47%.
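Why would expanding bandwidth help positioning? A common textbook relation for an IFFT-based delay profile is that two propagation paths can be separated only if their delays differ by at least about 1/B, i.e. their path lengths differ by at least c/B. The sketch below illustrates that relation under stated assumptions: the hop set, the helper names `path_length_resolution` and `stitched_span`, and the restriction to adjacent channels are all hypothetical and are not taken from VR-Fi's FHBE design, whose details (for example, how phase offsets between hops are corrected) are not described in this abstract.

```python
# Illustrative sketch only: helper names and the hop set are hypothetical,
# not VR-Fi's actual FHBE implementation.

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def path_length_resolution(bandwidth_hz: float) -> float:
    """Textbook delay-profile resolution expressed as path length: c / B."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return SPEED_OF_LIGHT / bandwidth_hz


def stitched_span(centers_hz: list[float], width_hz: float) -> float:
    """Total frequency span covered by hopped channels of equal width.

    Assumes the channels are adjacent (no gaps, no overlap), so the span
    equals the effective bandwidth after stitching their CSI together.
    Real stitching must also align per-hop phase offsets, which is ignored here.
    """
    ordered = sorted(centers_hz)
    for lo, hi in zip(ordered, ordered[1:]):
        if abs((hi - lo) - width_hz) > 1e-6:
            raise ValueError("this sketch only handles adjacent, non-overlapping channels")
    return (ordered[-1] + width_hz / 2) - (ordered[0] - width_hz / 2)


if __name__ == "__main__":
    width = 80e6
    # Hypothetical hop set: three adjacent 80 MHz channels in the 5 GHz band.
    hops = [5530e6, 5610e6, 5690e6]
    single = path_length_resolution(width)
    span = stitched_span(hops, width)
    expanded = path_length_resolution(span)
    print(f"single channel: {width / 1e6:.0f} MHz -> {single:.2f} m")
    print(f"stitched:       {span / 1e6:.0f} MHz -> {expanded:.2f} m")
```

Running it prints a path-length resolution of about 3.75 m for one 80 MHz channel and about 1.25 m for the stitched 240 MHz span, a threefold improvement. If the signal travels to the hand and back to a co-located receiver, the resolvable change in hand distance is half the path-length figure.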